Upon graduating from my M.S. program at Carnegie Mellon University, I joined Boston Dynamics in July 2023. Boston Dynamics continues to be at the forefront of advanced robotics development, pushing the boundaries of what robots can do.

I joined the machine learning team for Atlas. Atlas is the world's most dynamic humanoid robot, capable of performing advanced feats of agility and complex manipulation behaviors. Some of Atlas' achievements include performing backflips, parkour, and dancing, as well as practical tasks such as moving heavy automotive parts. My work revolves around researching imitation learning and machine learning techniques towards advancing manipulation and perception for humanoid robots. For more info about what our team is up to, check out some of the videos below!

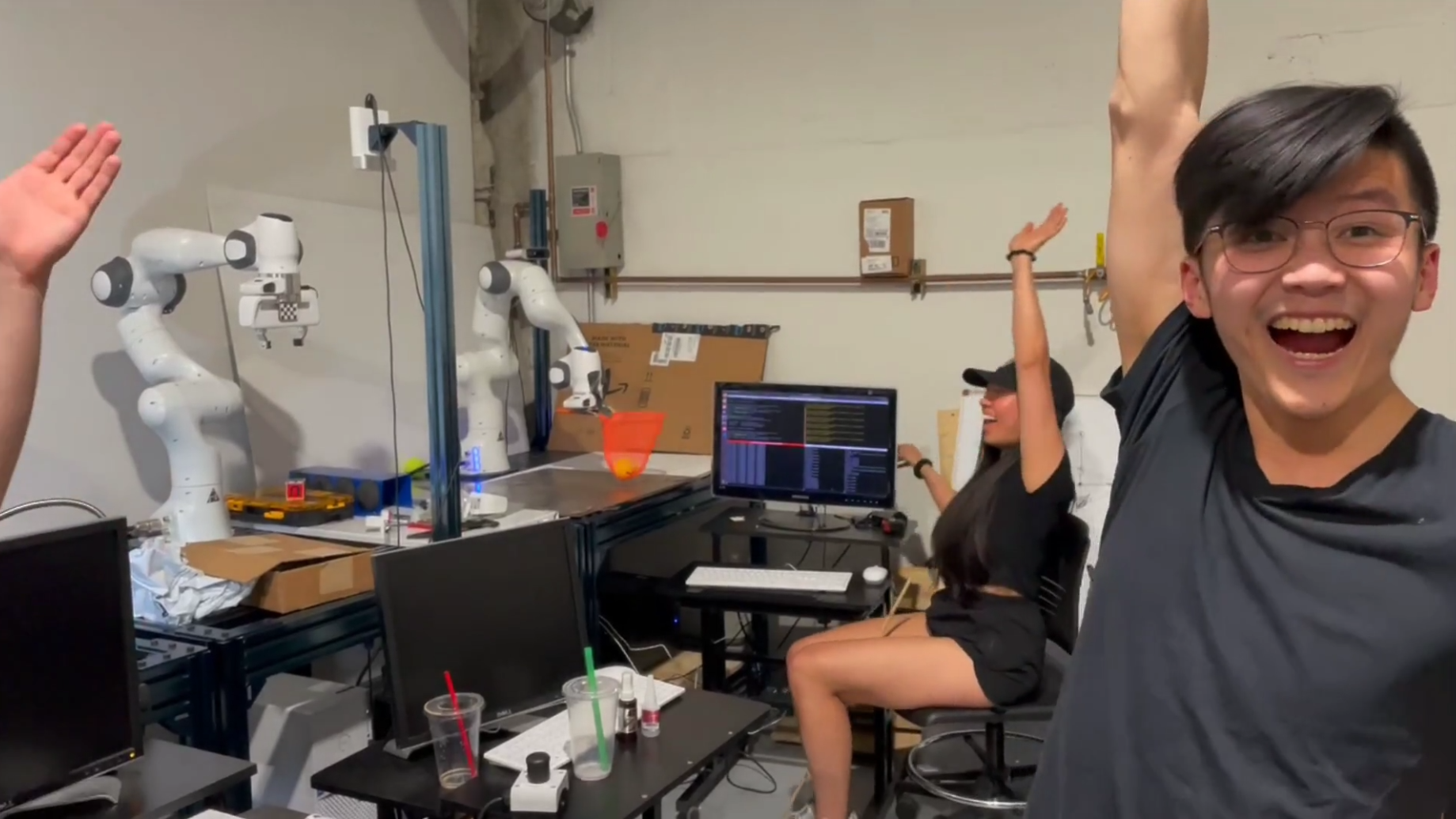

During the summer of 2022, I had the opportunity to join Everyday Robots as a Roboticist/Software Engineering intern. Born from X, the Moonshot Factory (formerly known as Google X), Everyday Robots is developing a generalized learning robot that can be taught to help us in the spaces that we live and work. The project has seen much progress and success towards developing intelligent robots capable of navigating complex environments, manipulating and interacting with general objects, and ultimately understanding how to assist people with everyday tasks.

Building a generalized learning robot means solving some of the most challenging problems in robotics. Motivated by Everyday Robots' mission, I joined the Robot Skills team, directly working on these challenging problems. This line of work was focused on truly unlocking the learning capabilities of robots, to develop an agent that can be taught new skills and apply them towards general tasks. Throughout my internship project I leveraged reinforcement learning techniques to help teach these skills to robots, experimenting with novel ways to improve their learning ability. One of my main contributions was developing new approaches for training policies, enabling faster and more intuitive task completion.

I really enjoyed the working culture at Everyday Robots. Everyday Robots (and X, the Moonshot Factory, in general) is unique in the sense that it feels like a smaller startup within a much larger company (Google/Alphabet), which means you get the best of both worlds. Despite being an intern, I felt that my work was highly impactful for the overall project and mission; I was consistently challenged to solve difficult problems in innovative ways, and further motivated by the fast pace of development, which is most typical of a smaller company. At the same time, I was supported by the large-scale resources, streamlined development and training pipelines, and extensive benefits that come with operating within a larger company. On top of all of this, the team I worked with was incredibly talented, supportive, and approachable. I'm thankful to have met so many people with diverse backgrounds, and to have shared countless conversations with them about their past experiences and their insights into the future of robotics.

I'm very proud of the work that I accomplished at Everyday Robots. The idea of a general robot that can learn to perform everyday tasks is incredibly inspiring. Everyday Robots is pushing the frontier of what is possible with robotics with a product that will someday revolutionize the world, and I'm very happy to have been a part of it.

While the specifics of my main projects cannot be publicly disclosed, please enjoy this GIF of a fun little side project I worked on during my time at Everyday Robots. Teaching the robot to perform a bottle flip was something I worked on in collaboration with a few other interns, and it was even shared by Everyday Robots on LinkedIn. Pretty flippin' cool stuff :) I'm excited to see how far we can push the learning capabilities of robots in the future!

![Robot does a bottle flip [GIF]](projects/images/googlex/robot_bottle_flip.gif)

As I aspire to bring more intelligent robots to the world, one area of research that I've become more and more interested in is human-robot interaction. Traditionally, robots have predominantly operated in environments that lack human connection, such as manufacturing lines, assembly plants, and warehouses. This has been for good reason: traditional robots excel at performing repetitive, high-precision tasks within well-structured, predictable environments. Humans, on the other hand, can be quite unpredictable, which poses a significant challenge for robotics. These challenges are becoming more addressable with the advent of machine learning, though there is still a lot more to be explored. I believe that, for robots to truly revolutionize and improve the way we live on a day-to-day basis, we must design them to operate within human-centric environments. By studying the nature of interactions between humans and robots, we unlock the potential to develop robots that can better assist, understand, and learn from people in a more social setting.

Eager to learn more, I joined the Human and Robot Partners (HARP) Lab at CMU upon starting my Master's degree in September 2021. Directed by Dr. Henny Admoni, the HARP Lab focuses on human-robot interaction, with the goal of developing intelligent robots that can help and improve people's lives. In my research I work most closely with PhD candidate Pallavi Koppol, with a primary focus on human-in-the-loop learning.

These days, robots are capable of learning from data, such as a dataset of facial recognition images or a series of human speech samples. Human-in-the-loop learning is an area of research that seeks to understand how robots can best learn from interactions with people, and how people can be more directly involved in teaching robots. This field is quite exciting to me, as it combines my passions for machine learning/reinforcement learning and human-robot interaction, and I believe it to be an impactful area of study for the future of robotics. My most recent work has involved simulating teacher models that emulate human responses to robot queries, as part of developing a novel interactive learning algorithm. This work, titled "INQUIRE: INteractive Querying for User-aware Informative REasoning", has been accepted and published at the 2022 Conference on Robot Learning (CoRL). The INQUIRE algorithm proposed in this paper introduces a new method for interactive learning that can reason over multiple interaction types, such as demonstrations, preferences, corrections, and binary rewards. A robot can then choose the optimal interaction type for querying its teacher, and receive the feedback that is most informative given its current knowledge and the state of the task at hand. I'm currently continuing research with the HARP Lab, investigating how robots can better communicate what they've learned to a human teacher through various forms of paraphrasing.
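The core idea of picking whichever interaction is most informative for the robot can be illustrated with a toy expected-information-gain calculation. This is a simplified sketch, not the INQUIRE algorithm from the paper: it assumes a small discrete set of hypotheses about what the teacher wants, a uniform prior over them, noise-free teacher answers, and hypothetical per-interaction costs.

```python
import math
from collections import Counter


def entropy(probs):
    """Shannon entropy (in bits) of a discrete distribution."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_info_gain(answers):
    """Expected information gain of one query under a uniform prior.

    answers[i] is the (deterministic) answer the teacher would give if
    hypothesis i were true. With noise-free answers, the posterior keeps only
    the hypotheses consistent with the observed answer, so the expected gain
    equals the entropy of the answer distribution.
    """
    counts = Counter(answers)
    n = len(answers)
    return entropy([c / n for c in counts.values()])


def choose_query(queries, costs):
    """Pick the interaction type with the highest expected gain per unit cost.

    queries: hypothetical mapping from interaction type to per-hypothesis answers.
    costs:   hypothetical mapping from interaction type to teacher effort.
    """
    return max(queries, key=lambda q: expected_info_gain(queries[q]) / costs[q])


# Four hypotheses: a preference query splits them 2/2, a binary reward 1/3.
queries = {
    "preference": ["A", "A", "B", "B"],
    "binary_reward": ["yes", "no", "no", "no"],
}
print(choose_query(queries, {"preference": 1.0, "binary_reward": 1.0}))
```

With equal costs the even 2/2 split wins (1 bit versus about 0.81 bits); if the binary reward is assumed to cost half as much teacher effort, the cost-weighted choice flips to it.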

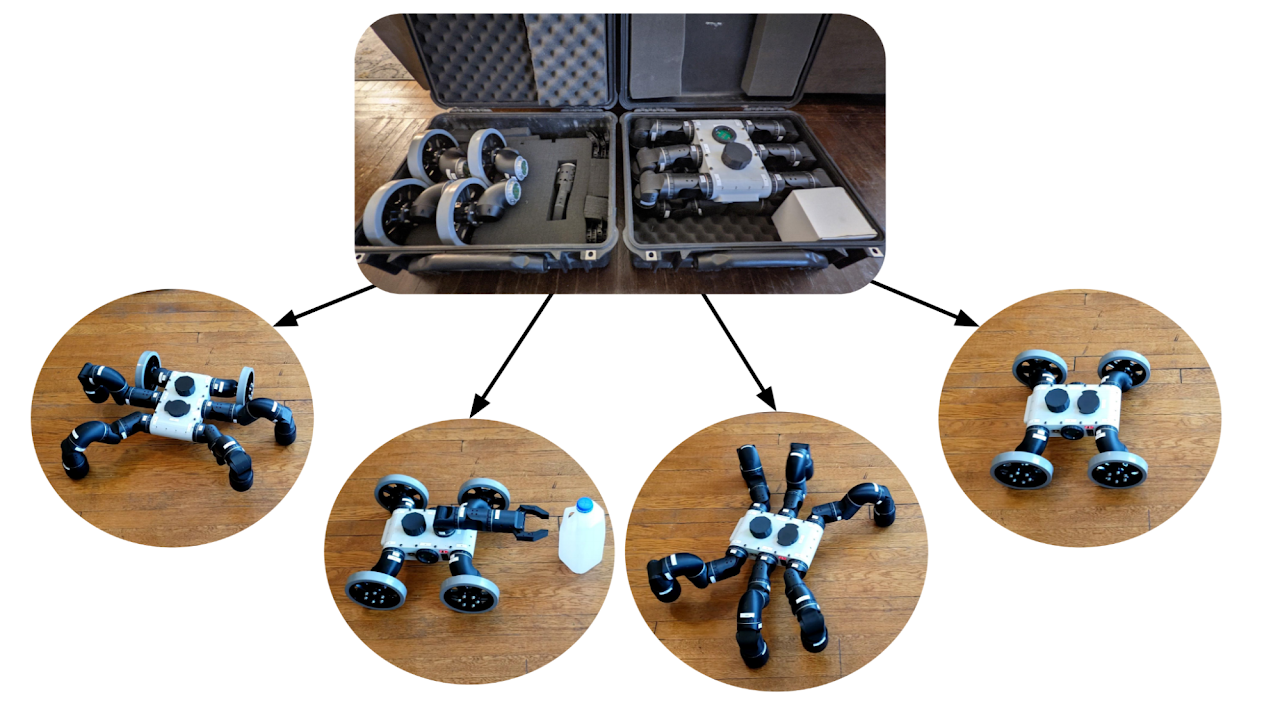

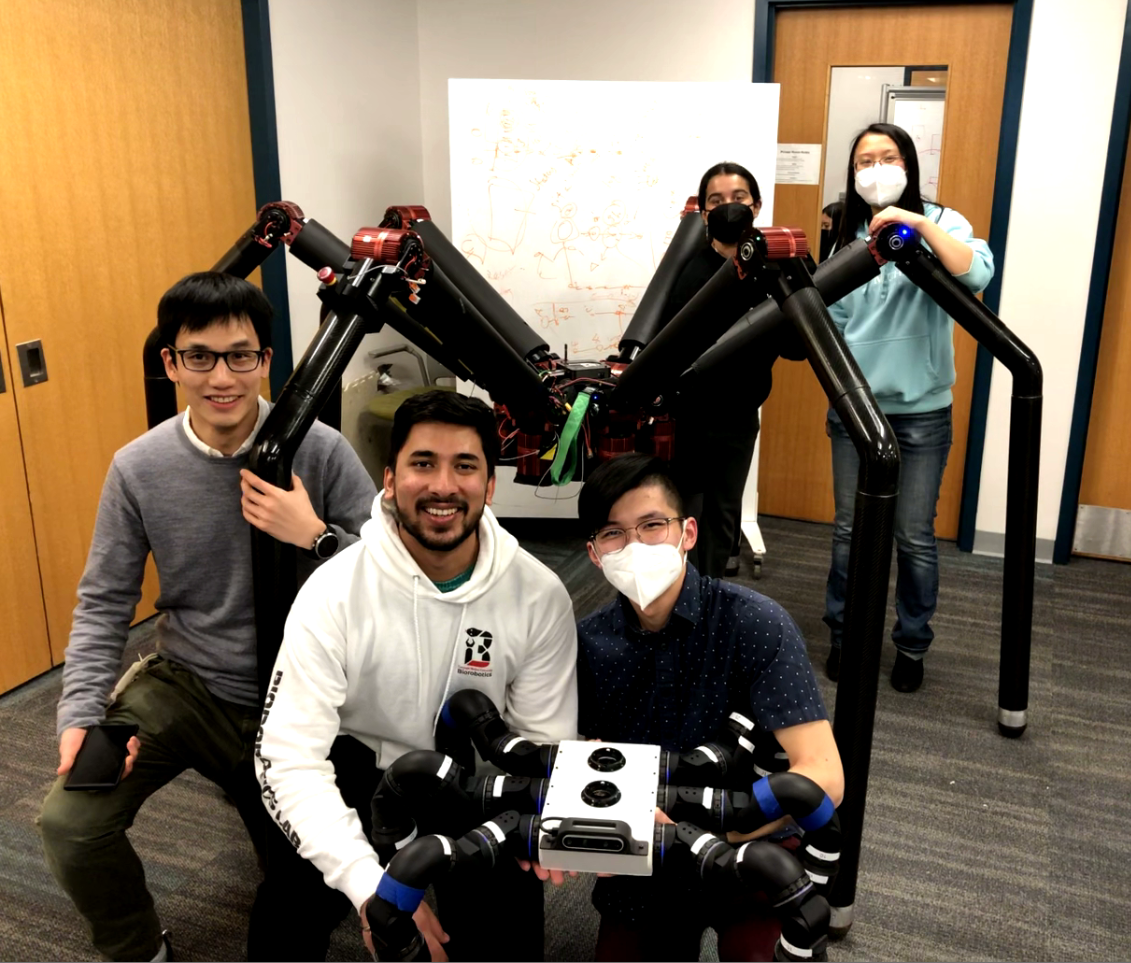

Upon starting my Master's program in September 2021, I joined the Biorobotics Lab at Carnegie Mellon University. Led by Dr. Howie Choset and Dr. Matthew Travers, the Biorobotics Lab seeks to develop robots inspired by biology, "to boldly go where no other robot has gone before". Specifically, I work on the robot known as EigenBot, which is centered around the concept of modularity. Several module types have been developed for EigenBot, such as individual leg joint modules and wheel modules. These modules are self-contained, with their own PCBs to facilitate sensing, actuation, and awareness of other connected modules. EigenBot also includes a central body that exposes several ports for interfacing with these modules. In a nominal configuration, one might connect several leg joint modules to the body to create a hexapod (spider-like) robot. However, these modules can be seamlessly swapped out for other modules to create all sorts of configurations, such as wheeled robots, gripper robots, or wheeled-legged hybrids. With EigenBot's modular design, the possibilities are limited only by the user's imagination.

My contributions to the EigenBot project have been primarily on the software side. In my first year working on the project, I led software development and architected a ROS-based software pipeline to incorporate more autonomy into the system. I developed robot perception capabilities for EigenBot, interfacing with an Intel RealSense depth camera for computer vision and obstacle detection. I also improved EigenBot's modular gait controller, so that the robot's mobility could be controlled regardless of the specific configuration of modules (wheeled, legged, or some kind of hybrid, as shown below). Finally, I brought up a simulation environment for EigenBot in CoppeliaSim (formerly V-REP) to streamline autonomous software testing and development.

I also had the opportunity to demonstrate EigenBot's capabilities to the rest of the lab and to others in the robotics community at CMU. It was incredibly satisfying to show off the results of our hard work in front of a public audience, and to answer many insightful questions from keen students about the modular system design.

Now in my second year on the project, I continue to support the EigenBot team as a consultant and mentor. I've also assisted in onboarding new students working on EigenBot, and continue to provide resources and guidance from a systems engineering perspective. I'm excited by the growing interest in the project, and optimistic about the future of modular robots!

Shortly after completing my undergraduate degree in 2020, I worked as a full-time robotics software engineer at Gatik AI. Originally my plan was to begin graduate studies at Carnegie Mellon University that September, but due to the COVID-19 pandemic this ended up getting delayed. Fortunately, during that time I had the exciting opportunity to join this dynamic startup, building software to control the behaviour of self-driving vehicles and make them more intelligent.

At Gatik AI, my main role was developing software as part of the Planning & Controls team. This involved characterizing the vehicle system and dynamics in order to design and tune controllers for the vehicle actuators (brake, throttle, and steering) using classical control approaches such as LQR and PID control. I then built upon these approaches by training a machine learning model to predict the pedal actuation effort required to achieve a desired vehicle acceleration command. This learning-based approach was designed with scalability and fleet management in mind, allowing certain parameters to be determined directly from just 20 minutes of human driving data, whereas previous manual tuning approaches would often take hours on end. I primarily developed software in C++ and Python, using ROS2 to architect the robotics software stack for deployment, and TensorFlow/Keras for machine learning model development.
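For readers less familiar with the classical side, a discrete PID loop like the one below is the textbook form of one of the controllers mentioned above. It is a generic sketch, not Gatik's controller: the gains, the normalized [-1, 1] actuator range, and the simple conditional-integration anti-windup rule are all assumptions.

```python
class PID:
    """Discrete PID controller, e.g. mapping a speed error to a pedal command.

    Gains, time step, and output limits are illustrative assumptions.
    """

    def __init__(self, kp, ki, kd, dt, out_min=-1.0, out_max=1.0):
        self.kp, self.ki, self.kd, self.dt = kp, ki, kd, dt
        self.out_min, self.out_max = out_min, out_max
        self.integral = 0.0
        self.prev_error = None  # no derivative term on the very first sample

    def step(self, error):
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / self.dt
        self.prev_error = error
        candidate_integral = self.integral + error * self.dt
        u = self.kp * error + self.ki * candidate_integral + self.kd * derivative
        # Anti-windup: only commit the new integral while the output is unsaturated.
        if self.out_min <= u <= self.out_max:
            self.integral = candidate_integral
        return min(max(u, self.out_min), self.out_max)


speed_pid = PID(kp=0.5, ki=0.5, kd=0.0, dt=0.1)
print(speed_pid.step(1.0), speed_pid.step(1.0))  # proportional + growing integral term
```

Freezing the integral while the actuator is saturated keeps the controller from accumulating a large error backlog (for example during a long full-throttle climb) that would later cause overshoot.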

The developments I made on the Planning & Controls team encompassed a sizable portion of my work at Gatik AI, though being part of a high-growth startup meant that I often found myself taking on additional responsibilities. Another thread of development I was heavily involved with was autonomous vehicle simulation. I made improvements to vehicle dynamics modeling in simulation, integrated the autonomy stack with simulation to streamline continuous integration, and worked with third-party service providers to develop new features for the simulation software.

I also assisted in the development of a teleoperation system, which allowed a remote assistant to monitor the autonomous vehicle and provide inputs to influence driving behaviour when necessary. My work involved architecting the teleoperation component from a systems engineering perspective, and developing the ROS2 nodes that enabled communication between the teleoperator and the autonomy stack. Safety considerations were a critical aspect of this system, since the vehicle must behave safely even when remote assistance is unavailable.

One other component I contributed significantly to was the software that interfaced between our autonomy stack and the drive-by-wire (DBW) system. I built ROS2 nodes that integrated with DBW systems (which were often different per vehicle) and communicated over the CAN bus to control and receive feedback from vehicle actuators. I also created tools and scripts for unit testing the DBW system, as well as for debugging low-level issues by visualizing the data in the CAN frames being sent and received. In addition, I was often involved with vehicle bringup and provisioning. This included configuring and calibrating the various sensors on the vehicle, setting up the drivers and software stack for the on-board computers, and testing basic autonomous behaviours to validate that the vehicle was ready for deployment onto public roads.
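Decoding a DBW feedback frame mostly comes down to unpacking fixed-width fields from the 8-byte CAN payload and applying scale factors. The sketch below uses Python's standard `struct` module on a hypothetical frame layout (the arbitration ID, field offsets, and scaling are invented for illustration; real layouts differ per vehicle and DBW vendor, which is part of why per-vehicle integration work was needed).

```python
import struct

# Hypothetical DBW feedback frame layout (illustrative only):
#   bytes 0-1: steering angle, signed 16-bit little-endian, 0.1 degree per bit
#   byte  2  : brake pedal position, unsigned 8-bit, percent
STEERING_FEEDBACK_ID = 0x123  # hypothetical arbitration ID


def decode_steering_feedback(arbitration_id, data):
    """Decode the raw payload of the hypothetical steering/brake feedback frame."""
    if arbitration_id != STEERING_FEEDBACK_ID:
        raise ValueError(f"unexpected CAN ID 0x{arbitration_id:X}")
    if len(data) < 3:
        raise ValueError("payload too short")
    raw_angle, brake_pct = struct.unpack_from("<hB", data, 0)
    return {"steering_deg": raw_angle / 10, "brake_pct": brake_pct}


def format_frame(arbitration_id, data):
    """Render a frame as a compact ID#DATA debug string (the notation cansend accepts)."""
    return f"{arbitration_id:03X}#{data.hex().upper()}"


payload = bytes([0x2C, 0x01, 0x32, 0, 0, 0, 0, 0])
print(format_frame(STEERING_FEEDBACK_ID, payload))
print(decode_steering_feedback(STEERING_FEEDBACK_ID, payload))
```

Keeping the layout in one place (here, the format string and scale factor) is what makes it practical to swap in a different decoder per vehicle while the rest of the stack consumes the same engineering units.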

Outside of software engineering tasks, I also had the opportunity to get involved with technical leadership. As one of the earlier members of the Planning & Controls team, I led the team's quarterly planning, breaking down the work into tasks and assigning them as needed to reach the milestones laid out for the quarter. I also initiated many technical discussions about future work and development from a systems engineering perspective, and drove sprint planning efforts.

I was incredibly happy with everything I was able to accomplish working at Gatik AI. I touched on many subjects throughout my time there, diving into topics such as control theory and machine learning in much more depth than I had previously experienced. As I apply everything I've learned directly to my studies at Carnegie Mellon, I look forward to watching the future of autonomous vehicles unfold for the betterment of society, and to seeing Gatik AI continue to make headlines in the self-driving industry.

In the summer of 2019, I had the opportunity to travel to Cambodia to work for Demine Robotics, a startup company whose goal is to use robotic technology for landmine clearance. Cambodia is one of many countries with an abundance of buried landmines and unexploded ordnance (UXO) left over from war. There are an estimated 4 to 6 million landmines and UXOs in Cambodia, threatening civilian lives. Cleanup efforts thus far have relied on manual labour, in which human deminers search for the explosives using metal detectors or trained detection animals, and then unearth the explosives using shovels and other hand tools. This process is not only laborious and inefficient, but also incredibly dangerous. Demine Robotics hopes to employ its robotic technology to keep human deminers out of life-threatening conditions.

My main role on the team was Autonomous Systems Lead. When I joined the team, the robot was entirely teleoperated, and the human deminer would control the robot remotely to excavate a landmine. It was often difficult for the deminer to accurately align the robot's excavation tool with the desired digging location, and a high degree of precision is required to avoid accidentally triggering an explosion. For this reason, there was a desire to enable autonomous behaviour in which the robot would independently align itself with the desired excavation point without the need for full human control. Throughout this project, I developed all the software and infrastructure required for motion planning, feedback control, and image processing. The system was extensively tested on a Clearpath Husky robot (at the time, the Demine robot was undergoing a mechanical overhaul, so a separate robot platform was used to test the autonomous driving software). Tests showed that the robot was able to align with a desired digging point within a tolerance of roughly 4 cm, which was an acceptable margin of error given the size of the UXOs and the profile of the autonomous digging tool. The autonomous alignment program was implemented in C++ using the ROS framework.
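The alignment behaviour described above is, at its core, a feedback controller that drives a mobile base to a target point and stops within a tolerance. The sketch below uses a textbook proportional go-to-point law on a unicycle model (a reasonable approximation of a skid-steer platform such as the Husky). It is not the original C++/ROS implementation; the gains, time step, and Euler integration are assumptions, and only the 4 cm default tolerance comes from the text above.

```python
import math


def align_to_point(x, y, theta, goal_x, goal_y, tol=0.04, k_rho=0.5, k_alpha=2.0,
                   dt=0.05, max_steps=2000):
    """Drive a unicycle-model robot (x, y, heading theta) to a goal point.

    Forward speed scales with distance to the goal, turn rate with heading
    error. Returns the final pose and whether it ended within `tol` metres.
    """
    for _ in range(max_steps):
        dx, dy = goal_x - x, goal_y - y
        rho = math.hypot(dx, dy)
        if rho < tol:
            return (x, y, theta), True
        # Heading error to the goal, wrapped to [-pi, pi].
        alpha = math.atan2(dy, dx) - theta
        alpha = math.atan2(math.sin(alpha), math.cos(alpha))
        # Don't drive forward while facing away from the goal; turn in place instead.
        v = k_rho * rho * max(math.cos(alpha), 0.0)
        omega = k_alpha * alpha
        # Forward-Euler integration of the unicycle kinematics.
        x += v * math.cos(theta) * dt
        y += v * math.sin(theta) * dt
        theta += omega * dt
    return (x, y, theta), False


pose, reached = align_to_point(0.0, 0.0, 0.0, 2.0, 1.0)
print(reached, pose)
```

Choosing the turn gain larger than the distance gain makes the heading error settle faster than the distance shrinks, so the robot straightens out before it creeps the final few centimetres.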

An additional project I led during my time at Demine Robotics focused on the electromechanical design of a novel machine. This was an exciting experience for me, as most of my past experience pertained to robotics software development and I was eager to build my skills in a new area. When the Demine robot excavates a landmine, an entire clump of soil containing the explosive is unearthed. Before disposing of the clump, it is necessary to clear off enough dirt to verify that there really is an explosive inside. Since this process is also dangerous for a human to perform by hand, there was a need for a device that could perform the dirt removal automatically. I led the mechanical design of this machine. Some of my design considerations included the size of the soil clump, how the machine would interface with the Demine robot's excavation tool, and the availability of materials and assembly processes in Cambodia. The CAD was completed in Fusion 360, and throughout the design cycle I continually asked my team members for feedback to improve the design. Additionally, I designed the circuitry and microcontroller code for operating the device. We went through many prototypes of the machine, and by the end of my internship we had tested a full-scale device that successfully separated the soil from a dirt mound containing an explosive.

My internship at Demine Robotics was not only a valuable technical experience, but also an incredible cultural journey. I took great pleasure in experiencing everything that Cambodian traditions had to offer, from interesting cuisines (we ate a lot of insects!) to traveling to popular landmarks and historic sites. At one point I visited an active minefield that was located immediately adjacent to a school for children. Needless to say, living in Cambodia opened my eyes to some of the social issues that I wouldn't experience back home. More than ever, I'm motivated to make a difference in the world through robotics. I truly hope to see Demine Robotics' mission come to fruition.

During the fall of 2018, I interned at Lyft Level 5, which is Lyft's autonomous driving division. The team was relatively new, having started in 2017, which made it an exciting and dynamic job with lots to do.

I worked on the motion planning team, more specifically working on the behavioural planner. The behavioural planner ingests information regarding the vehicle's state and surrounding obstacles to understand the semantics of the current driving scenario. From there, the vehicle is able to make smarter decisions when planning a trajectory, in order to adhere to road rules while also accounting for predicted behaviours of moving objects. One feature I developed focused on handling scenarios involving pedestrians and crosswalks. This included ensuring that the vehicle yields to crossing pedestrians as needed, while also ensuring that the planner is robust enough to perform with a high degree of safety, even when inputs are noisy or misleading.
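One common way to make a yield decision robust to noisy perception is asymmetric hysteresis: react to a detection immediately, but require several consecutive clear frames before releasing. The sketch below is a hypothetical illustration of that idea, not Lyft's behavioural planner; the per-frame boolean interface and the `release_frames` value are assumptions.

```python
class YieldLatch:
    """Yield as soon as a pedestrian is detected in the crosswalk, but only
    release after `release_frames` consecutive clear frames, so a single
    dropped or flickering detection does not make the vehicle lurch forward."""

    def __init__(self, release_frames=3):
        self.release_frames = release_frames
        self.clear_count = release_frames  # start in the "not yielding" state
        self.yielding = False

    def update(self, pedestrian_in_crosswalk):
        if pedestrian_in_crosswalk:
            self.yielding = True
            self.clear_count = 0
        else:
            self.clear_count += 1
            if self.clear_count >= self.release_frames:
                self.yielding = False
        return self.yielding


latch = YieldLatch(release_frames=3)
detections = [False, True, False, True, False, False, False]  # flickering detector
print([latch.update(d) for d in detections])
```

The asymmetry is deliberate: a false positive costs a brief, unnecessary stop, while a false negative at a crosswalk is a safety issue, so the latch errs toward yielding.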

Another project I worked on was the development of an automated testing framework to simulate several driving scenarios. To validate the functionality of the behavioural planner, it is ideal to have a set of simulations that can mimic vehicle interactions in the real world. I took the lead on developing these scenarios and designing test plans around them. One challenging aspect of this project was defining metrics that could determine whether a test passed or failed. It is difficult to measure quantitatively the vehicle's ability to follow laws and perform expected, yet complex, behaviours. Furthermore, the test framework had to be designed so that simulating new scenarios and testing new features required little manual overhead. These were all considerations I kept in mind as I developed this framework.

I'm incredibly grateful to have been a part of Lyft's self-driving team. Autonomous technologies and robotics are a strong passion of mine, so getting the industry experience has been invaluable to me. I'm excited for the future of self-driving cars and the impact they will have!

The SDIC (Structural Dynamics, Identification and Control) research group is a lab at the University of Waterloo, whose focus is to develop a robotics platform to monitor the integrity of bridges, buildings and other structures. I had the opportunity to work on this project as an Undergraduate Research Assistant, developing new features for their robot.

While I worked there, a Clearpath Husky UGV was being used to prototype the technology. The sensor suite mounted on the Husky included two LIDARs, an IMU, GPS, cameras and more. I primarily worked on the software stack, using the ROS framework and PCL to improve the processing of point clouds coming from the LIDARs.

I worked at the SDIC lab on two occasions, during separate study terms in 2018 and 2019. During my first term, I worked on the implementation of point cloud registration techniques for the robot. As the robot moves, the LIDARs collect point cloud representations of the surroundings. Point cloud registration is the process of aligning point clouds captured at consecutive timestamps into a common frame, so that they can be stitched together into an accurate 3D map of the environment. Another feature I worked on was a ROS node for LIDAR calibration, which allowed for easy adjustment of the relative transformation between the two LIDARs to minimize the misalignment between their point clouds.
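Registration is commonly done with Iterative Closest Point (ICP). The NumPy sketch below shows the basic point-to-point variant: match each point to its nearest neighbour, solve for the best rigid transform in closed form with the SVD-based Kabsch method, and repeat. It is a generic illustration rather than the lab's PCL pipeline (PCL ships its own ICP implementation), and the brute-force neighbour search here is only suitable for small clouds.

```python
import numpy as np


def best_fit_transform(src, dst):
    """Kabsch: least-squares rotation R and translation t with R @ src_i + t ~ dst_i,
    given known point correspondences (rows of src and dst)."""
    src_c, dst_c = src.mean(axis=0), dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    if np.linalg.det(R) < 0:  # correct an improper rotation (reflection)
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_c - R @ src_c
    return R, t


def icp(src, dst, iters=30):
    """Point-to-point ICP. Returns the accumulated (R, t) and the aligned source cloud."""
    R_total, t_total = np.eye(3), np.zeros(3)
    cur = src.copy()
    for _ in range(iters):
        # Brute-force nearest neighbour in dst for every point in cur.
        d2 = ((cur[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        matched = dst[d2.argmin(axis=1)]
        R, t = best_fit_transform(cur, matched)
        cur = cur @ R.T + t
        # Compose: new pose = R @ (R_total @ p + t_total) + t.
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total, cur
```

Each iteration can only lower (or keep) the mean squared nearest-neighbour distance, but ICP converges to a local minimum, which is why a reasonable initial guess (for example from odometry or an IMU) matters in practice.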

During my second term, I assisted in the development of a novel system for automated extrinsic calibration of robot-mounted sensors. The project involved using a Vicon camera system to track the position and orientation of targets placed on the sensors, relative to the pose of the robot. I developed software in MATLAB and C++ to process the pose data retrieved from the calibration procedure, and to optimize for the coordinate transformations of the sensors with respect to the robot using a GraphSLAM-based approach. Overall, I'm happy with the work I accomplished, and I was able to gain new perspectives working on an advanced robotics project in a research setting.
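The quantity being estimated is a fixed rigid transform per sensor. From a single pair of motion-capture poses it follows directly from composing homogeneous transforms, as in the minimal NumPy sketch below. This is not the GraphSLAM-based method described above; that approach exists precisely because individual samples are noisy and many observations must be fused jointly. The yaw-only `make_pose` helper is a simplifying assumption for illustration.

```python
import numpy as np


def make_pose(yaw, translation):
    """4x4 homogeneous transform from a yaw angle (rad) and a 3-vector translation."""
    c, s = np.cos(yaw), np.sin(yaw)
    T = np.eye(4)
    T[:3, :3] = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    T[:3, 3] = translation
    return T


def sensor_in_robot(T_world_robot, T_world_sensor):
    """Extrinsic transform of a sensor in the robot frame from one pair of
    tracked poses: T_robot_sensor = inv(T_world_robot) @ T_world_sensor."""
    return np.linalg.inv(T_world_robot) @ T_world_sensor


# Robot rotated 90 degrees at (1, 2, 0); sensor mounted 0.3 m ahead and 0.5 m up.
T_wr = make_pose(np.pi / 2, [1.0, 2.0, 0.0])
T_ws = T_wr @ make_pose(0.0, [0.3, 0.0, 0.5])
print(np.round(sensor_in_robot(T_wr, T_ws), 6))
```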

I had the pleasure of interning at Apple's headquarters in Cupertino, California from January to April 2018. My job consisted of developing software to enhance the performance of Apple's operating systems across all platforms. This included modeling different percentiles of Apple product users, and developing automated tests to track performance regressions for the Core ML project. The tasks I worked on required a strong understanding of the operating system in order to make good decisions and evaluations regarding performance.

What I liked most about working at Apple was the people. Everyone is hardworking and talented, and the workplace culture encourages collaboration and teamwork.

This was also my first internship outside of Canada, and it was a blast. Silicon Valley is full of inspiring people and places, and I'm happy to take in everything it has to offer. I'm taking every opportunity to try something new, and look forward to the experiences to come.

Avidbots develops robots for large-scale floor cleaning. I worked as the Coverage Planning Co-op, a role focused on how the robot plans its route to clean an entire floor. Given a floor map and localization estimates, the coverage planner plans a path from the robot's start pose to a goal pose, while ensuring that the path covers as much floor as possible.

Ultimately, I developed software to improve the performance, path planning and decision-making capabilities of the robot. Some examples of features I implemented include improved collision checking when planning a route, automatic obstacle avoidance, and costmap clearing techniques to eliminate ghost obstacles. Other day-to-day tasks included running simulations to test the features I created, and fixing bugs in unhandled edge cases related to path generation failures. These tasks required a thorough understanding of the planning algorithms used, and of the interactions between different components of the robot system.
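A standard way to remove ghost obstacles (used, for example, by the ROS navigation stack's costmaps) is raytracing: cells that a sensor beam passes through before hitting something are marked free again, and only the cell at the beam's endpoint is marked occupied. The sketch below illustrates the idea on a binary occupancy grid using Bresenham's line algorithm; binary cells and single-ray updates are simplifying assumptions, and this is not Avidbots' implementation.

```python
def bresenham(x0, y0, x1, y1):
    """Grid cells on the line from (x0, y0) to (x1, y1), inclusive (Bresenham)."""
    cells = []
    dx, dy = abs(x1 - x0), -abs(y1 - y0)
    sx = 1 if x0 < x1 else -1
    sy = 1 if y0 < y1 else -1
    err = dx + dy
    while True:
        cells.append((x0, y0))
        if x0 == x1 and y0 == y1:
            break
        e2 = 2 * err
        if e2 >= dy:
            err += dy
            x0 += sx
        if e2 <= dx:
            err += dx
            y0 += sy
    return cells


def raytrace_update(grid, sensor, hit):
    """Clear every cell the ray passes through and mark the endpoint occupied.

    grid[y][x] is 1 for occupied and 0 for free; sensor and hit are (x, y) cells.
    """
    cells = bresenham(*sensor, *hit)
    for x, y in cells[:-1]:
        grid[y][x] = 0  # the beam passed through: any obstacle here was a ghost
    hx, hy = cells[-1]
    grid[hy][hx] = 1
    return grid


grid = [[0] * 5 for _ in range(5)]
grid[0][2] = 1  # stale "ghost" obstacle left in the map
raytrace_update(grid, sensor=(0, 0), hit=(4, 0))
print(grid[0])
```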

In my final month at Avidbots, I initiated a research project to investigate new methods of performing coverage planning in complex environments. In the context of robotics, coverage planning is a form of path planning in which a robot must plan motions to cover an entire space. One application of coverage planning is in floor-cleaning robots, such as those at Avidbots. My project focused on coverage planning for irregularly-shaped maps. I researched a variety of techniques for coverage planning, and implemented algorithms to test a selected method. After testing the program in simulation on an irregularly-shaped map, the results yielded a 24% increase in coverage area.
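For context, the simplest coverage baseline is a boustrophedon ("lawnmower") sweep over an occupancy grid, scored by the fraction of free cells the path visits. The sketch below is an illustrative assumption, not the method researched in this project. Irregularly-shaped maps are exactly where such naive sweeps struggle, because obstacles split rows into disconnected segments, and this version does not plan the transitions between them.

```python
def boustrophedon_order(grid):
    """Visit free cells row by row, alternating direction (a 'lawnmower' sweep).

    grid[r][c] is 0 for free and 1 for an obstacle. Transitions between
    disconnected segments of a row are not planned here.
    """
    path = []
    for r, row in enumerate(grid):
        cols = range(len(row)) if r % 2 == 0 else range(len(row) - 1, -1, -1)
        path.extend((r, c) for c in cols if row[c] == 0)
    return path


def coverage_fraction(grid, path):
    """Fraction of free cells visited by the path."""
    free = {(r, c) for r, row in enumerate(grid) for c, v in enumerate(row) if v == 0}
    return len(free & set(path)) / len(free)


grid = [
    [0, 0, 0],
    [0, 1, 0],
    [0, 0, 0],
]
path = boustrophedon_order(grid)
print(path)
print(coverage_fraction(grid, path), coverage_fraction(grid, path[:4]))
```

A metric like `coverage_fraction` is one simple way to compare planners on the same map, though a real evaluation would also account for the robot's cleaning footprint rather than single grid cells.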

Working at Avidbots, I developed many skills relevant to the field of robotics, such as the use of Robot Operating System (ROS), creating software that interfaces with the physical world, and a thorough understanding of path planning algorithms and autonomy. I very much enjoyed the experience and would love to continue in this direction as a career path.

WATonomous is a student design team aiming to bring an electric vehicle to Level 4 Autonomy, as part of the three-year SAE AutoDrive Challenge. WATonomous is the largest student design team on the University of Waterloo campus, with over 100 passionate students.

From WATonomous' founding in 2017, I worked as a software project manager and mentor, overseeing the software development required for the vehicle. I managed a group of 50+ students, making high-level decisions regarding software tasks. This included technical tasks such as algorithmic development, structuring the data pipeline and research on autonomous vehicles, as well as managerial tasks such as ensuring all sub-teams were on track with term milestones. I also held seminars on autonomous driving and taught members how to use Robot Operating System (ROS).

Throughout this experience I gained a breadth of knowledge on the subject of self-driving cars and mobile robotics. Some of the development teams I led included path planning, localization and mapping, object perception, prediction and more. To lead the team effectively, I became well acquainted with the algorithms involved in these tasks, as well as the required sensors and hardware. I was excited to be involved in this competition, working on the project throughout my undergraduate career. Our team did very well during the years I was involved, winning first prize in multiple categories of the competition.

WonderMakr is a creative technology studio. I worked as a core member of the engineering team to bring a variety of machines and displays to life. I designed and built projects to create immersive digital experiences through the inventive use of technology. Below are a few examples of the projects I completed over the course of my 4 month co-op term.

The Hendrick's Cucumber Organ is exactly what it sounds like: an organ played on cucumbers! This was my favourite project during my time at WonderMakr, since I led it as the main technologist. I handled the system design and implementation of the technology from start to finish, using capacitive touch sensors along with Arduino. The organ was showcased at the Toronto Eaton Centre as part of a Hendrick's Gin promotion.

I was heavily involved in the software development for this project: an interactive wall of LED strips that react to incoming tweets. Controlled by a Raspberry Pi, the LED Wall is able to listen for tweets containing a certain hashtag, identify emojis in the tweet, and display the emoji using LEDs. It is also capable of processing and displaying images (including animated GIFs) that are uploaded to the Pi via a local webpage.
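The text-processing half of this project, matching a hashtag and pulling emojis out of a tweet, can be sketched with regular expressions. This is a hypothetical illustration rather than the project's code: the codepoint ranges only approximate the emoji set (multi-codepoint emojis such as skin-tone or ZWJ sequences are not handled as single units), and fetching tweets from the platform's API is out of scope.

```python
import re

# Approximate emoji ranges (an assumption): Miscellaneous Symbols and Pictographs
# through Symbols and Pictographs Extended-A, plus Miscellaneous Symbols/Dingbats.
EMOJI_RE = re.compile("[\U0001F300-\U0001FAFF\u2600-\u27BF]")


def matches_hashtag(text, hashtag):
    """True if the tweet contains #hashtag as a whole word (case-insensitive)."""
    return re.search(rf"#{re.escape(hashtag)}\b", text, re.IGNORECASE) is not None


def extract_emojis(text):
    """Return the single-codepoint emojis found in the text, in order."""
    return EMOJI_RE.findall(text)


tweet = "Go team #LEDwall \U0001F600\U0001F525"
if matches_hashtag(tweet, "ledwall"):
    print(extract_emojis(tweet))
```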

This arcade-style machine features a virtual slots game. The goal of the game is to match the colours of cars traveling down three highway lanes, simulating a round of slots. For this project, I was tasked with circuit building and programming the microcontrollers.

I was responsible for the hardware integration of WestJet's "Virtual Baggage", which was the focus of the above commercial. The unique baggage allows WestJetters to communicate with a traveling family through live-streaming suitcases and golf bags.

I led the circuit design and prototyping for an LG V20 phone display. Users would be able to compare the audio quality of LG's V20 phone with a competitor's phone by turning a rotary switch. The switch controlled the sound output through headphones as well as LED light strips. Above is a photo of the work in progress.

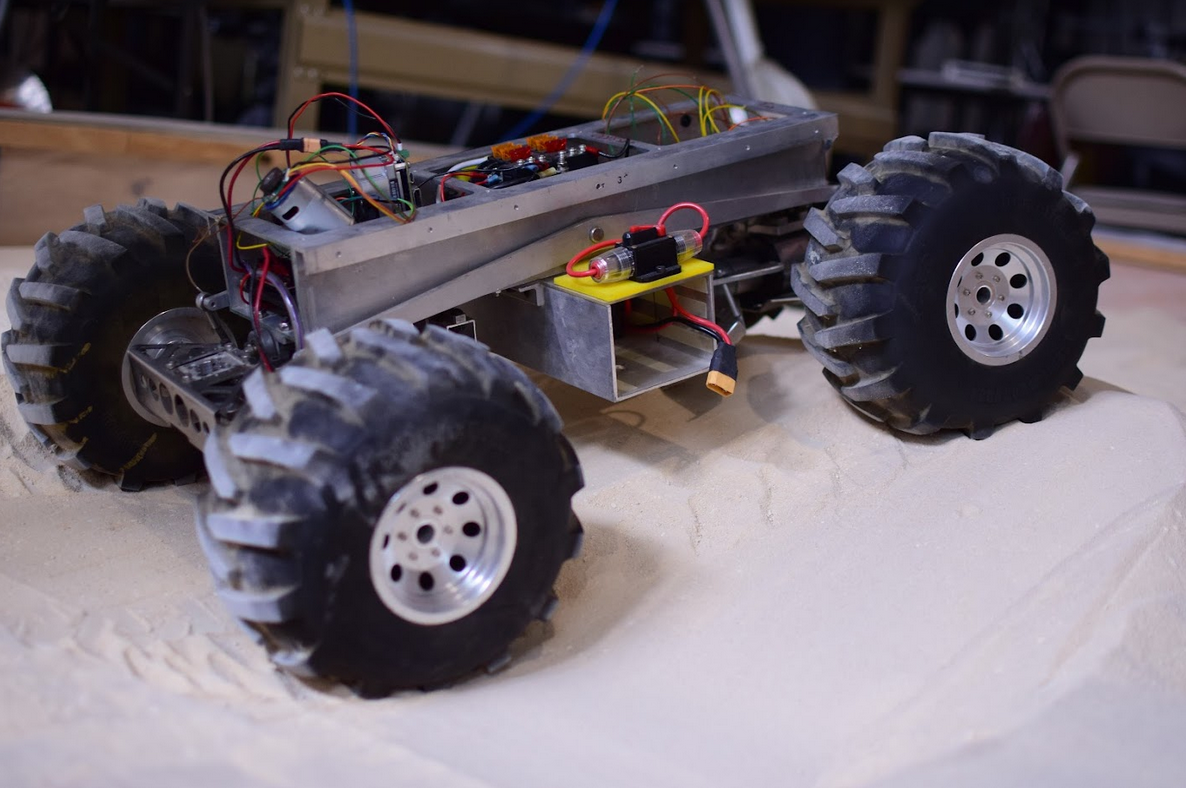

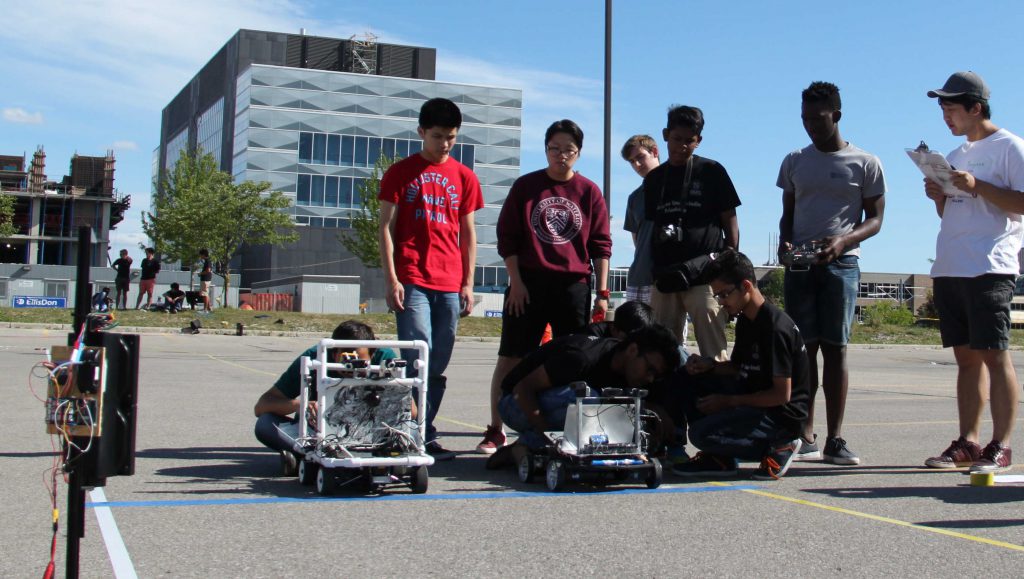

In my first year of university, I joined the University of Waterloo Robotics Team (UW Robotics). The team builds robots to compete in a variety of competitions. While in first year, I was tasked with leading the fabrication of an autonomous RC car-sized vehicle to compete in the International Autonomous Robot Racing Challenge (IARRC).

The IARRC is held annually at the University of Waterloo, where teams come from around the world to compete in racing challenges. The autonomous vehicles can participate in a drag race and circuit race.

Our robot is pictured on the left. It features a lightweight aluminum chassis, a roll cage made from PVC piping, three cameras, and a LIDAR for obstacle detection and path planning.

Throughout this experience I was able to learn a lot about robotics and autonomous systems. While I mainly focused on fabrication as the mechanical lead, I also had the opportunity to help the software team, learning about computer vision and using the ROS framework. The competition further strengthened my passion for mechatronics and was definitely one of my most memorable first-year experiences.

In 2014, I co-founded Urbin Solutions, which was what truly brought out my passion for robotics. It started as a project from SHAD, a Canadian summer leadership program for high school students interested in business, engineering and/or science.

We were challenged to come up with a business idea that would help Canadians reduce their environmental footprint. Our solution was the Urbin, a self-sorting garbage bin that would reduce the amount of recyclable and compostable waste sent to landfills, without requiring people to change their behaviour.

As one of the Head Engineers, I built two prototypes of the Urbin using Arduino microcontrollers, together with a colleague. We researched and experimented with a variety of sensors to produce our first prototype (left).

The prototype was able to sort paper and food from other types of waste, and used a servo-based swivel system to mechanically sort materials. We later improved our prototype to build a second module that could additionally identify metal, and added a conveyor belt system (right). A video of the second module, which was displayed at a trade show, can be found here.

We pitched Urbin Solutions at many entrepreneurial competitions to promote our ideas and accumulate funding. Some of the awards we received include:

- Shad Valley Entrepreneurship Cup: Best Prototype

- Waterloo Innovation Challenge: Highest Degree of Innovation

- Make Your Pitch 2015

- Young Entrepreneurs Competition 2015

- Manitoba High School New Venture Championships: Top Idea Pitch

We had a pretty good run with it.

The project was disbanded at the end of 2015, as many of the team members dedicated more time towards university studies. Nonetheless, I am grateful to have been part of this venture from start to finish. It has sparked my interest in engineering and entrepreneurship, taught me to be curious and innovative, and has driven me to pursue a career in the field of robotics.

Getting involved with FIRST Robotics was what propelled me into the field of mechatronics, robotics and AI. When I began high school, we did not have an established robotics club. I was one of the founding members, and was later designated the team leader as a Grade 9 student. Our club focused on building a robot to compete in the FIRST Robotics Competition (FRC), in which many high school teams brought their robots to participate in a sports-like challenge.

We competed in a basketball-based challenge. Matches were 3-on-3, with robots from different high schools taking the court to play against each other in a timed match. Robots could score points by shooting balls into baskets or by balancing on bridges in the middle of the court.

Our robot was designed for bridge balancing, with a powered arm and small size for fitting multiple robots onto a single bridge (see video here). We were the first team in North America to successfully balance all three robots onto one bridge.

Yes, it was quite exciting to watch our "Little Robot That Could" perform on the court. We advanced to the quarterfinals of the competition, and also won the Judges' Award. I could not have asked for a more thrilling experience to kick-start my career in robotics.